They're coming, aren't they?

AI agents. Here to steal our jobs and put us all out on the street. (Mine included.)

That's the talk I see everywhere: LinkedIn posts, headlines about layoffs, memes on Instagram. Whether we're on board or not, AI is here, seeping into how we work, how we think, and how we interact with the world.

As a writer, this hits home. Writing was one of the first skills to get "automated," and I got plenty of concerned questions: Are you quitting? Looking for a job AI can't take?

I felt the fear, too. I found myself Googling whether AI could push me out of my job, while simultaneously testing every AI tool I could find, trying not to get left behind.

It's weird, using the tools people say will replace you. But something changed as I kept using AI to brainstorm blog ideas, draft copy, and even proofread this post. My AI tools and I became... colleagues? Friends?

Suddenly, I could draft blogs that used to take forever. I could try multiple versions of copy without dragging teammates into endless revision meetings.

I watched my team do the same thing:

- Designers used Midjourney for quick mockups

- Developers used Cursor to build features

- Even our founder was building AI apps on weekends

Months passed. I'm still here.

And I finally have an answer to that original question.

Working with AI

I realized that the word "with" matters more than I thought.

AI can generate content, sure. But I'm the one with taste. I shape what it produces. I decide what works and what doesn't. It's not telling me what to do; I'm telling it how to help.

That's where we've landed: AI as a teammate. It handles the repetitive stuff, but the input comes from me. The judgment calls? Still mine.

This comes up a lot in my work at Copilot.live. Here's what I can tell you: we're not building to replace people. We're trying to make sure human attention goes where it's needed.

AI is already handling the boring stuff

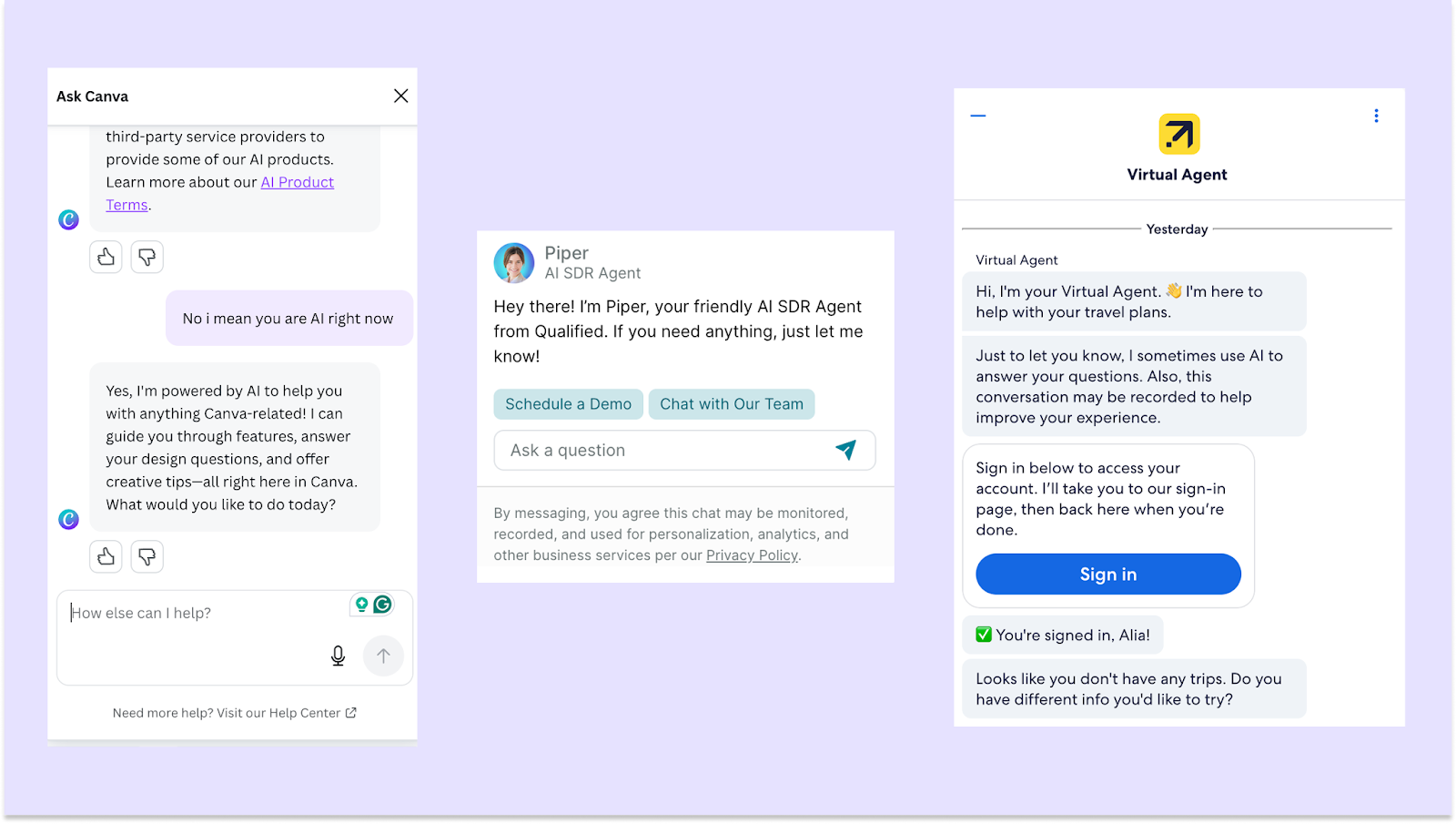

AI agents are showing up everywhere, taking care of the repetitive tasks that eat up everyone's day.

Some examples:

- Bank of America uses AI to handle nearly half of all support tickets, letting human agents focus on complex issues

- Canva uses AI to manage basic help center queries, freeing up its team for design-specific problems

It's not just customer support, either. In sales, AI books demo meetings and handles early-stage questions. In HR, it walks new hires through policies and internal FAQs. In ops, it answers the "where's that link again?" questions.

Anywhere people are asked the same thing over and over, AI can help clear the queue.

Where humans still matter (and always will)

AI is helpful. But it’s not magic. It still gets tripped up by edge cases. It misses emotional cues. It doesn’t know when someone’s just having a bad day and needs a different kind of care.

The things that move people, that build trust, spark joy, and create resonance, still come from us.

In my head, this splits into two core things: taste and empathy.

Taste

AI can generate things fast. Content, designs, emails, entire websites. But knowing what’s worth making in the first place? That’s still on us.

AI can generate five versions. Only a person can say, “this one feels right.”

We decide what resonates, what cuts through, and what should exist at all. And as AI-generated content fills our feeds, that kind of discernment (taste) becomes even more important.

Empathy

This one needs less explaining, because we all already know it.

AI doesn’t feel. It can mimic a warm tone. It can say “I’m sorry to hear that.” But it’s doing that because a model learned the pattern, not because it understands pain.

Take hiring. AI can scan a thousand resumes, but it can’t tell you who’s going to gel with the team.

That takes a human. A real conversation. A moment where someone says something that doesn’t tick the usual boxes, but makes you feel like, “yeah, this is someone I’d want around.”

The magic happens when they work together

Like I said, the way I work with AI is probably how we'll all be working with it soon. Together.

We built Copilot.live because we kept seeing teams hit these exact problems. You want AI to help, but you need humans to stay in control.

Inside Copilot.live, you can:

- Enable human handover when the bot gets stuck, with full conversation context

- Use sentiment analysis to get an overview of how your agent's conversations are going and where to improve them

- Set fallback flows that let agents admit when they don't have the knowledge to answer a question, instead of forcing them to hallucinate an answer (More about agents and hallucination here)

- Control what your Copilot knows (and how it talks) so it stays on-brand

- Retrain your agent based on real conversations that went sideways

You're not locked into one rigid workflow. You're building an assistant that knows when to step back and let you take over.

The future of work (as I see it)

We've seen this pattern before. Each major shift—industrial, digital, now AI—pushes us one level up.

From physical labor → to office work → to knowledge work → and now, to judgment and decision work.

AI isn't the end of the line. It's just the next step. It takes over what's repeatable, so we can focus on what's complex, contextual, and human. The teams that figure out how to work with AI instead of fighting it are the ones that stay sharp, move faster, and still feel very much human.